What is remote sensing?

Remote sensing is the science of obtaining information about the Earth’s surface without being in direct contact with it, using sensors carried by different platforms (drones, satellites, aircraft, etc.).

Here we will focus on satellite images, which allow us to study the evolution of natural and man-made phenomena, such as volcanic eruptions, earthquakes, forest fires, floods, glacier melting, deforestation or the effects of urban sprawl, and to detect their consequences.

The availability of satellite images of the entire world, at near-daily frequency when atmospheric conditions permit, allows us to identify and monitor the natural phenomena and human activities that cause notable changes in the Earth’s surface.

But how are images of the Earth obtained and processed?

Satellites are watching us

Satellites are objects that orbit the Earth with a specific mission: telecommunications (TV, telephones), navigation and location (GPS) or weather (Meteosat). In our case, we will be focusing on the satellites that periodically observe and monitor the Earth.

You have probably heard of NASA (National Aeronautics and Space Administration), the US agency that captures images of the Earth through a series of satellites called Landsat. Since its first launch, there have been seven missions. Each mission places in orbit a satellite carrying various sensors and instruments to monitor the state of the Earth. These sensors detect the radiation coming from the Earth’s surface at different wavelengths, and this signal is processed and transformed into information that we can then analyse.

In Europe, the agency in charge of monitoring the Earth from space is the ESA (European Space Agency), through the Copernicus program, which is dedicated to observing the Earth. For this program the ESA has developed a family of satellite missions called Sentinel.

Sentinel-1

Satellites in this mission follow a polar, sun-synchronous orbit around the Earth; that is, they travel from the North Pole to the South Pole and back again. They carry a radar instrument, which lets them record images day and night and in any weather, even when it is cloudy.

Sentinel-2

The satellites in this mission also follow a polar orbit, like those in the Sentinel-1 mission. They carry a high-resolution multispectral sensor that captures images used to monitor vegetation, soil, bodies of water and coastal areas.

Sentinel-3

This is a platform that contains a wide variety of high-precision, reliable instruments to measure the elevation, temperature and colour of the land and ocean. It can monitor the climate and environment, as well as make maritime forecasts.

Sentinel-5P (Sentinel-5 Precursor)

This mission aims to fill the gap left by the Envisat satellite, with which contact was lost in 2012. It will in turn be succeeded by Sentinel-5, whose launch is planned for 2021. Sentinel-5P provides atmospheric data for monitoring air quality, particularly pollutants such as ozone, nitrogen dioxide and sulphur dioxide.

In the future, the ESA is planning to launch three more Sentinel missions:

Sentinel-4

This instrument will fly on a Meteosat Third Generation satellite and will monitor our planet’s atmosphere from geostationary orbit.

Sentinel-5

It will also provide data on the composition of the atmosphere and will be carried on a satellite of the EUMETSAT Polar System (EPS).

Sentinel-6

The aim is to continue the work of the Jason-2 satellite on high-precision topographic measurements of both the Earth’s surface and the ocean.

The Sentinel family... and many more!

We have focused on the satellites of the European Space Agency (ESA), but many other space agencies, in the US, China, Russia and elsewhere, also have missions in space. What is more, in recent years several companies and organisations have launched their own satellites to capture images of the Earth. One consequence is the large amount of debris now orbiting the Earth, known as space junk.


Sensors can take the Earth's pulse

Principle of remote sensing

Remote sensing is a technique for measuring or acquiring data about the Earth’s surface in the form of images. This is possible thanks to sensors mounted on airborne platforms, such as aeroplanes or drones, or on space platforms, such as satellites. The basic principle of remote sensing is to capture, through sensors, the electromagnetic radiation that objects reflect or emit.

The Earth gives off electromagnetic radiation

Electromagnetic radiation here means the electromagnetic waves that leave the Earth’s surface and travel out into space. Objects give off these waves when they are exposed to an energy source (generally sunlight), and sensors capture the radiation coming from them. There are two types of sensors: passive ones, which record the solar radiation that objects reflect; and active ones, which emit their own radiation towards the objects and measure its reflection.

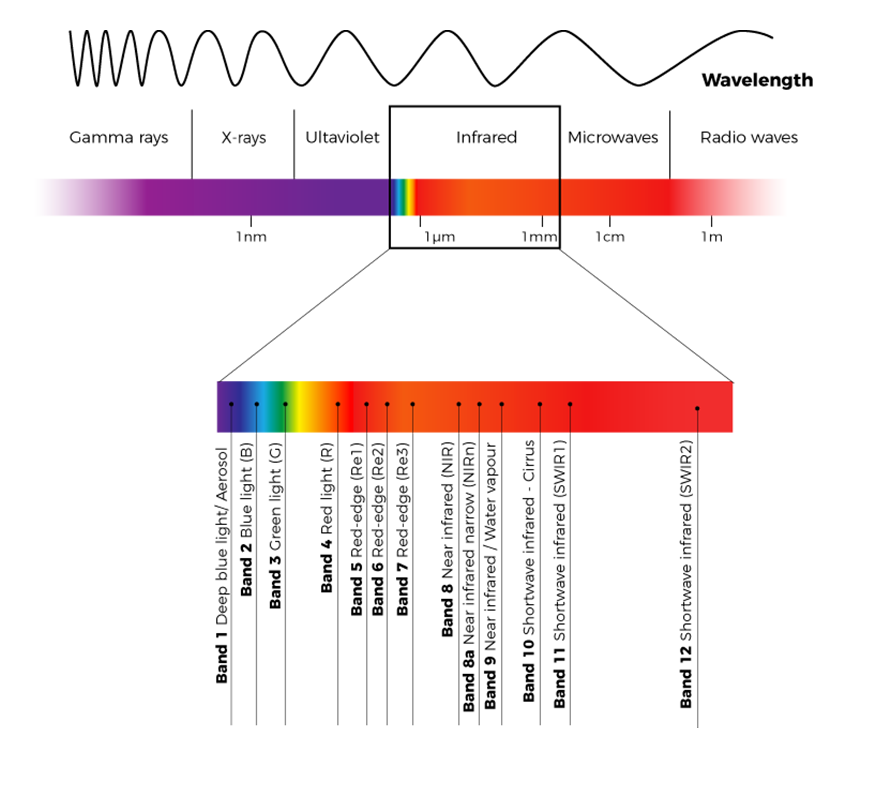

Electromagnetic spectrum: beyond what the naked eye can perceive

The human eye only captures a narrow range of the electromagnetic spectrum, between 0.4 and 0.7 microns; this is light, or the visible spectrum. Just below this range, at shorter wavelengths, is ultraviolet radiation, and just above it, at longer wavelengths, is infrared.
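As a rough illustration of these limits, the sketch below sorts a wavelength into one of the three regions mentioned here. The function name and the labels it returns are made up for this example, and the split is deliberately coarse: it ignores everything that lies beyond the ultraviolet and beyond the infrared.

```python
# Limits of the visible range in microns, as given in the text.
VISIBLE_MIN_UM = 0.4
VISIBLE_MAX_UM = 0.7


def spectral_region(wavelength_um: float) -> str:
    """Place a wavelength (in microns) relative to the visible range.

    Hypothetical helper for illustration only: anything shorter than the
    visible range is labelled "ultraviolet side" and anything longer is
    labelled "infrared side", without finer subdivisions.
    """
    if wavelength_um <= 0:
        raise ValueError("wavelength must be positive")
    if wavelength_um < VISIBLE_MIN_UM:
        return "ultraviolet side"
    if wavelength_um <= VISIBLE_MAX_UM:
        return "visible"
    return "infrared side"


for wavelength in (0.30, 0.55, 0.85):
    print(wavelength, "microns:", spectral_region(wavelength))
```

A wavelength of 0.55 microns (green light) falls inside the visible range, while 0.85 microns is already in the near infrared, which the eye cannot see but many satellite sensors can.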

Different regions of the electromagnetic spectrum can provide different information about the same object. To detect the reflection of objects in these regions or wavelengths, we need specialized sensors that perceive beyond what the human eye can see.

The sensors that most satellites use to observe the Earth can capture electromagnetic radiation from the ultraviolet to the infrared; beyond these wavelengths we need even more specialized sensors, such as the radar instruments of Radarsat, ERS-1 and ERS-2 or the SRTM (Shuttle Radar Topography Mission).

The spectral signature, an object's fingerprint

Every object has a spectral signature, that is, the percentage of radiation it reflects (its reflectance) in each region of the electromagnetic spectrum. In other words, the spectral signature is the fingerprint that defines every object on the Earth’s surface. That means, for example, that snow has a different spectral signature from vegetation or water, as its reflectance is different.

In this way, using remote sensing, we can distinguish between different land covers and land uses, tell healthy vegetation from vegetation burnt in a fire, or identify bodies of water after a flood.
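One simple way to picture this is to compare the reflectance measured for a pixel with a few reference signatures and pick the closest one. The sketch below does that with a plain distance measure. The reflectance values are illustrative assumptions chosen to show typical shapes, not measurements, and the names `REFERENCE_SIGNATURES` and `closest_cover` are invented for this example; real classification methods are considerably more elaborate.

```python
import math

# Illustrative reflectance (fraction of incoming light that is reflected)
# in four bands: blue, green, red, near infrared.
# These numbers are assumptions for the example, not measured values.
REFERENCE_SIGNATURES = {
    "snow":       (0.90, 0.90, 0.88, 0.80),
    "vegetation": (0.04, 0.08, 0.05, 0.50),
    "water":      (0.08, 0.06, 0.04, 0.01),
    "burnt area": (0.05, 0.06, 0.07, 0.10),
}


def closest_cover(pixel):
    """Return the reference class whose signature is nearest to the pixel."""
    return min(
        REFERENCE_SIGNATURES,
        key=lambda name: math.dist(pixel, REFERENCE_SIGNATURES[name]),
    )


# A pixel that is dark in the visible bands but bright in the near infrared.
print(closest_cover((0.05, 0.09, 0.06, 0.45)))
```

With these assumed values, the sample pixel is matched to vegetation, because its strong near-infrared reflectance sets it apart from water and burnt ground even though all three look dark in the visible bands.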

Multispectral images

As previously mentioned, most satellites capture information in different bands or ranges of the electromagnetic spectrum: not only those of the visible spectrum, such as the red, green and blue (RGB) colour bands, but also bands ranging from the ultraviolet to the near and short-wave infrared. These bands can be combined to obtain what we know as multispectral images.

Multispectral images allow us to see the characteristics of a particular object and to differentiate it from others that at first glance seem the same, for example by revealing how moist vegetation is or whether it has been burnt in a fire.
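A common way of combining bands, not named in this text but widely used, is the normalised difference index, which contrasts two bands as (a - b) / (a + b). The sketch below computes three such indices for a single pixel: NDVI (near infrared against red, for vegetation vigour), NDMI (near infrared against short-wave infrared, for moisture) and NBR (near infrared against a longer short-wave infrared band, for burnt areas). The reflectance values are assumptions for illustration only.

```python
def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or 0.0 when both inputs are zero."""
    total = a + b
    if total == 0:
        return 0.0
    return (a - b) / total


def indices(red: float, nir: float, swir1: float, swir2: float) -> dict:
    """Compute three widely used indices from one pixel's reflectances."""
    return {
        "NDVI": normalized_difference(nir, red),    # vegetation vigour
        "NDMI": normalized_difference(nir, swir1),  # vegetation moisture
        "NBR": normalized_difference(nir, swir2),   # burn severity
    }


# Assumed reflectances for illustration, not measurements.
healthy = indices(red=0.05, nir=0.50, swir1=0.20, swir2=0.10)
burnt = indices(red=0.07, nir=0.10, swir1=0.25, swir2=0.30)

for name in ("NDVI", "NDMI", "NBR"):
    print(f"{name}: healthy={healthy[name]:.2f} burnt={burnt[name]:.2f}")
```

With these assumed inputs, all three indices are clearly higher for the healthy pixel than for the burnt one, which is the kind of contrast that makes the two covers easy to separate even when they look similar in a true-colour image.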

Sentinel-2 in the Copernicus programme, for example, has 13 different bands, each with specific capabilities.
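To make the 13 bands more concrete, the sketch below lists them with their commonly quoted nominal central wavelengths and pixel sizes. Treat the numbers as approximate and check them against the official mission documentation before relying on them; the short descriptions and the helper `bands_at_resolution` are made up for this example.

```python
# Sentinel-2 bands: (band, description, central wavelength in nm, pixel size in m).
# Nominal, approximate values; verify against official documentation.
SENTINEL2_BANDS = [
    ("B01", "coastal aerosol", 443, 60),
    ("B02", "blue", 490, 10),
    ("B03", "green", 560, 10),
    ("B04", "red", 665, 10),
    ("B05", "red edge 1", 705, 20),
    ("B06", "red edge 2", 740, 20),
    ("B07", "red edge 3", 783, 20),
    ("B08", "near infrared", 842, 10),
    ("B8A", "narrow near infrared", 865, 20),
    ("B09", "water vapour", 945, 60),
    ("B10", "cirrus", 1375, 60),
    ("B11", "short-wave infrared 1", 1610, 20),
    ("B12", "short-wave infrared 2", 2190, 20),
]


def bands_at_resolution(pixel_size_m: int) -> list:
    """Return the names of the bands delivered at the given pixel size."""
    return [name for name, _, _, size in SENTINEL2_BANDS if size == pixel_size_m]


print(len(SENTINEL2_BANDS), "bands in total")
print("10 m bands:", bands_at_resolution(10))
```

The three visible bands (blue, green and red) and one near-infrared band have the finest pixel size, which is why they are the usual choice for true-colour and vegetation views.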